UX & self-service scorecards: Two sides of the same customer journey coin

I created these scorecards to show the combined efforts of both of my teams, UX and content strategy, toward improving the user experience.

Objectives

- Benchmark the as-is state in order to show improvements over time.

- Gain executive support to scale the team and the research initiative, securing resource allocations and budget, as well as getting UX a seat at the leadership table.

Challenges

- None of this data existed, so I needed to build everything from scratch: launching surveys, determining how to measure the self-service score, adding JS to track the ticket deflection rate, working with devs to extract error messages, initiating and implementing onboarding, setting up analytics to measure onboarding, and much more.

- At a tech startup, I was designing at least half of all features on a monthly release cadence, so I did not have much time to dedicate to this work.

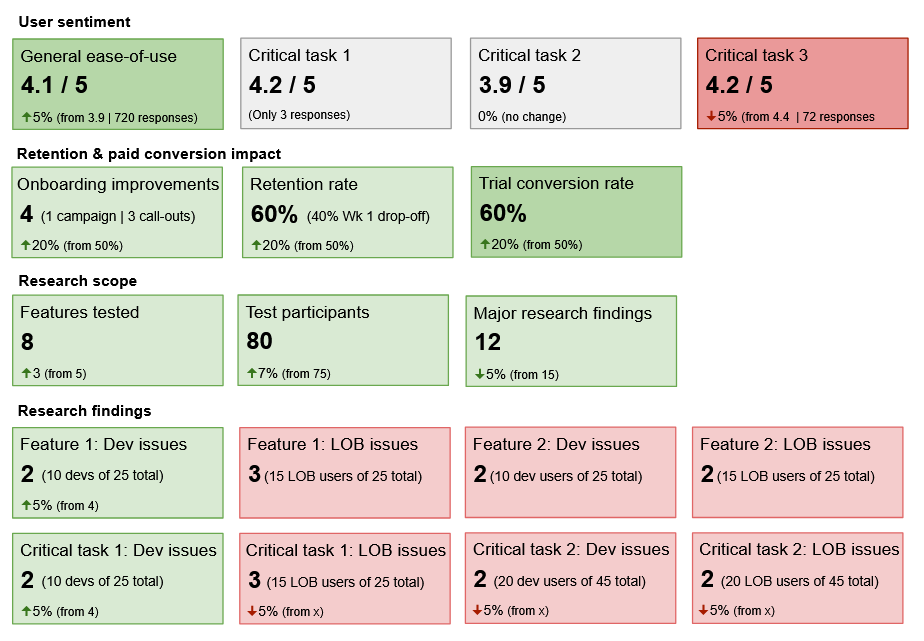

Measuring the customer journey user experience

In order to set the to-be state, I had to understand the as-is state. These are the items I focused on:

- User sentiment: This included general ease of use, as well as ease of use for critical tasks.

- Impact of onboarding on user retention (during the 30-day free trial) and trial conversion rate.

- Scope of research: When I started, all research was done ad hoc with customers willing to donate their time, as there was no budget. Today, there is a dedicated research budget thanks to these efforts.

- Research findings across main personas of developer/IT admin and LOB (line of business) users for particular features and critical tasks. These critical tasks were creating flows and managing errors, the two core functions users needed to do on the data integration platform.

Note: Green and red in these scorecards are used as a visual indicator of what I wanted to emphasize and call attention to during an executive business review. Shades of green and red could also be used to indicate degree of positive or negative impact.

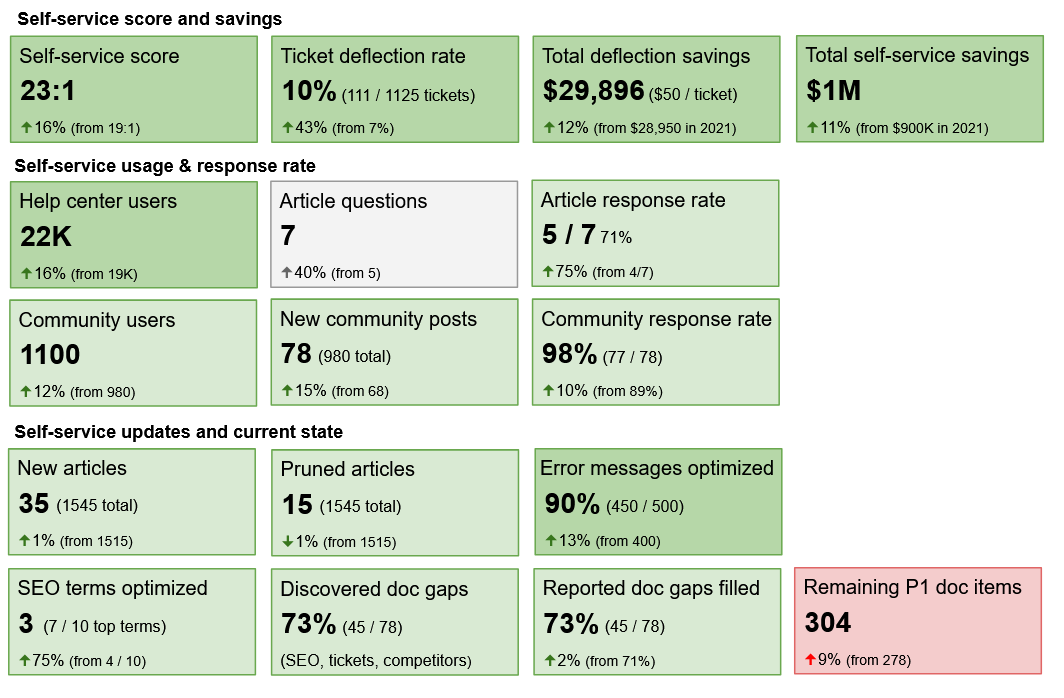

Measuring self-service

In order to set the to-be state, I had to understand the as-is state. These are the items I focused on:

- Self-service score and monetary savings: This included the self-service score and the ticket deflection rate. I worked with the head of support to get the cost per support ticket (changed here to $50/ticket), and reported on the running total saved from both self-service and ticket deflections. Initially, a ticket deflection was counted whenever a user went to create a support ticket but then clicked an article or community post suggestion. After building an engaged self-service community, I also included community responses to customer questions in this rate, to give a more accurate representation of support savings.

- Self-service usage & response rate: This included total number of Help center and community users, the number of article questions and community posts, and the rate of response, either internally or externally.

- Self-service updates and current state: This included new articles that were added, outdated articles that were pruned, error messages that were optimized for quicker troubleshooting, SEO terms that were optimized, doc gaps reported internally or externally, and doc gaps discovered during SEO, ticket analysis, competitor analysis, or other research. Because the highest-priority doc backlog kept growing, this data helped gain support for scaling the team.
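The savings arithmetic behind the first item above can be sketched roughly as follows. This is a minimal illustration, not the actual scorecard calculation: the class and field names are hypothetical, the sample numbers are invented for the example, and the deflection rate formula (deflections divided by deflections plus tickets created) is an assumption, since the exact definition is not spelled out here. The $50 cost per ticket is the placeholder figure mentioned above.

```python
from dataclasses import dataclass

# Placeholder cost per support ticket (the real figure was changed to $50 here).
COST_PER_TICKET_USD = 50


@dataclass
class MonthlySupportStats:
    """Hypothetical monthly counts feeding the self-service scorecard."""
    tickets_created: int         # tickets that were actually submitted
    suggestion_deflections: int  # user started a ticket, then clicked a suggested article/post
    community_answers: int       # customer questions answered in the community

    @property
    def deflections(self) -> int:
        # Both deflection sources count toward the total.
        return self.suggestion_deflections + self.community_answers

    @property
    def deflection_rate(self) -> float:
        # Assumed definition: share of potential tickets that were deflected.
        potential = self.deflections + self.tickets_created
        return self.deflections / potential if potential else 0.0

    @property
    def savings_usd(self) -> int:
        # Each deflected ticket is valued at the per-ticket support cost.
        return self.deflections * COST_PER_TICKET_USD


def running_savings(months: list[MonthlySupportStats]) -> list[int]:
    """Return the cumulative savings after each month."""
    totals, total = [], 0
    for month in months:
        total += month.savings_usd
        totals.append(total)
    return totals


# Invented sample data, for illustration only.
months = [
    MonthlySupportStats(tickets_created=80, suggestion_deflections=15, community_answers=5),
    MonthlySupportStats(tickets_created=70, suggestion_deflections=20, community_answers=10),
]
print([round(m.deflection_rate, 2) for m in months])
print(running_savings(months))
```

Keeping the two deflection sources as separate fields makes it easy to report the rate with and without community responses, which matches how the metric was broadened over time.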